Apache Iceberg vs Delta Lake: Ultimate Guide for Data Lakes

You've probably heard the buzz around Apache Iceberg and Delta Lake. If you're deep into big data, picking the right one can be tricky. So let's skip the hype and focus on what really matters for batch analytics and ML pipelines.

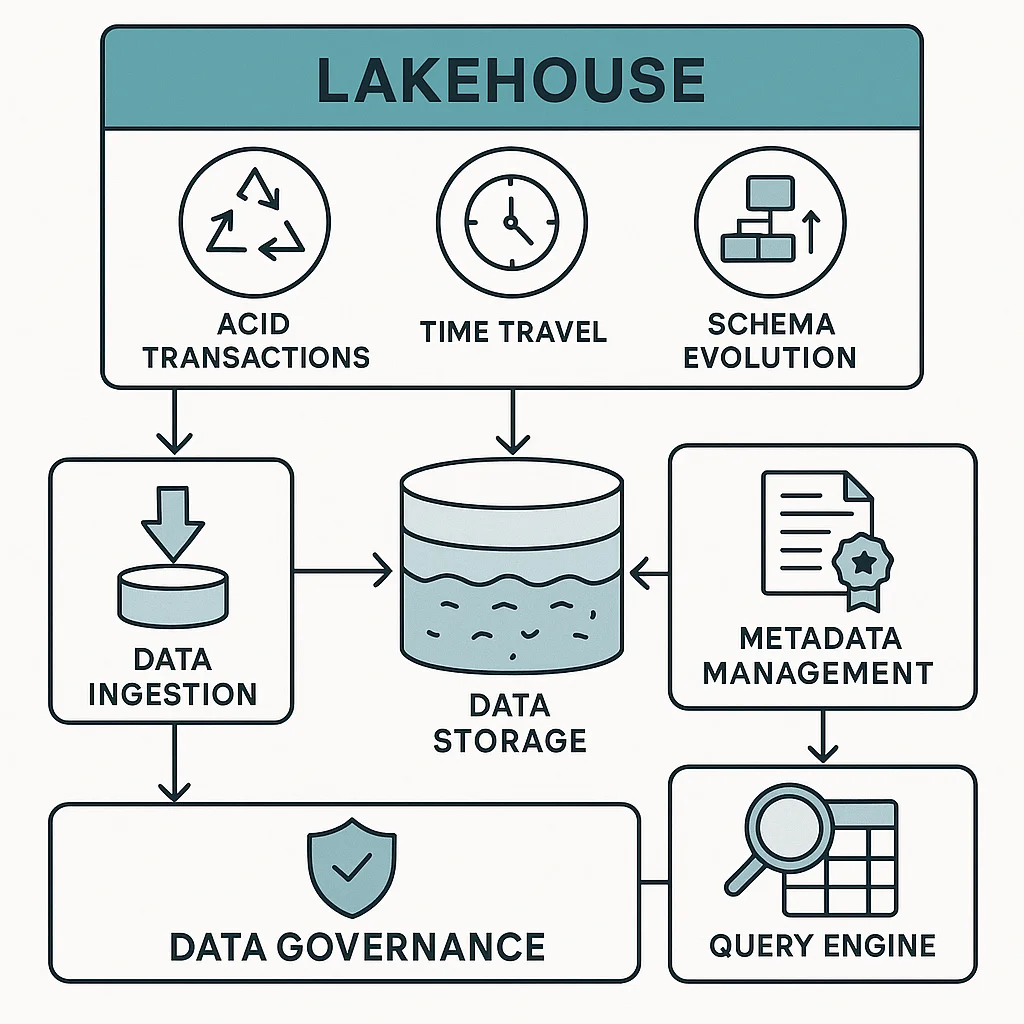

Open table formats are the game-changer for data lakes - they bring ACID transactions, time travel, and schema evolution to cheap object storage. That's warehouse-grade power on a lakehouse budget.

What This Comparison Is About

We're zooming in on use cases like ETL pipelines, feature engineering, and reproducible training runs where consistent, reliable data matters most.

Here's why formats like Iceberg and Delta shine in these workflows:

- Time travel & snapshots: Train models from a specific version, enabling reproducibility that truly works.

- ACID operations: MERGE, UPDATE, DELETE, essential for managing feature tables and late-arriving data.

- Schema evolution: Add or update fields without rewriting history, because nobody's got time for that.
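As a rough sketch of what pinning a training run to a table version can look like, the helper below builds Spark SQL time-travel queries. Recent Spark releases accept the `VERSION AS OF` and `TIMESTAMP AS OF` clauses for both Iceberg and Delta tables (for Iceberg the version is a snapshot ID, for Delta a commit version). The helper name, table name, and version number are made up for illustration.

```python
from typing import Optional


def time_travel_query(table: str, version: Optional[int] = None,
                      timestamp: Optional[str] = None) -> str:
    """Build a SELECT that reads a table as of a version or a timestamp.

    Exactly one of `version` / `timestamp` must be given.
    """
    if (version is None) == (timestamp is None):
        raise ValueError("pass exactly one of version or timestamp")
    if version is not None:
        return f"SELECT * FROM {table} VERSION AS OF {version}"
    return f"SELECT * FROM {table} TIMESTAMP AS OF '{timestamp}'"


# Hypothetical feature table and version id.
query = time_travel_query("lake.ml.user_features", version=42)
print(query)
# With a live session this would be run as: spark.sql(query)
```

Storing the version next to the trained model's metadata is what makes the run reproducible later, as long as that snapshot has not been expired or vacuumed away.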

Performance & Scalability: Where the Rubber Meets the Road

Performance is often the deciding factor and rightly so. The good news? Both formats are built to handle large-scale datasets efficiently, though each has its own strengths depending on the workload.

File Layout & Updates

Open-source Delta Lake uses copy-on-write by default: when you update data, it writes new files and marks the old ones for deletion. The newer Deletion Vectors (DVs) feature is pretty clever. It marks row-level changes without immediately rewriting entire files, which saves you from write amplification headaches. Databricks enables DVs by default on Delta tables.

Iceberg takes a different approach: format v2 uses equality and position delete files, and the newer format v3 introduces compact binary Deletion Vectors that reduce both read and write amplification, which is especially helpful for update-heavy tables.
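To make the trade-off concrete, here is a toy model of the two delete strategies. It is not either format's real file layout; `DataFile` and both functions are invented for illustration. A "deletion vector" here is just a set of row positions that readers skip.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set


@dataclass
class DataFile:
    rows: List[dict]
    # Positions of rows that are logically deleted (the "deletion vector").
    deleted_positions: Set[int] = field(default_factory=set)

    def live_rows(self) -> List[dict]:
        """Rows a reader sees after applying the deletion vector."""
        return [r for i, r in enumerate(self.rows)
                if i not in self.deleted_positions]


def delete_copy_on_write(f: DataFile, predicate: Callable[[dict], bool]) -> DataFile:
    """Rewrite the whole file without the matching rows."""
    return DataFile(rows=[r for r in f.live_rows() if not predicate(r)])


def delete_with_vector(f: DataFile, predicate: Callable[[dict], bool]) -> DataFile:
    """Leave the data untouched; only record which positions are deleted."""
    hits = {i for i, r in enumerate(f.rows) if predicate(r)}
    return DataFile(rows=f.rows, deleted_positions=f.deleted_positions | hits)


original = DataFile(rows=[{"id": 1}, {"id": 2}, {"id": 3}])
cow = delete_copy_on_write(original, lambda r: r["id"] == 2)
dv = delete_with_vector(original, lambda r: r["id"] == 2)
print(cow.live_rows() == dv.live_rows())
```

Both paths return the same live rows. The difference is cost: copy-on-write rewrote every surviving row, while the vector path wrote only a small set of positions and pushed the filtering work to read time (until a later compaction).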

OSS Delta Deletion Vectors Are Limited

Here's a key detail for folks using open-source Delta: Deletion Vectors (DVs) are now broadly generally available (GA) on Databricks runtimes, whereas in open-source Delta (OSS) the capability is still "emerging". You can read DVs from 2.3.0 onward and perform DELETE, UPDATE, and MERGE with them as of 3.1, yet many connectors, libraries, and sharing tools still lack full read-side or write-side support.

Query Planning at Scale

Apache Iceberg uses a hierarchical metadata model: each snapshot points to a manifest list, which summarizes the partition ranges covered by the manifests that in turn list the data files. This lets the query planner prune whole groups of files from metadata alone instead of listing object storage, so planning stays fast even across millions of files, a major performance win for large-scale datasets.
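The pruning idea can be sketched in a few lines. This is a simplified model, not Iceberg's actual metadata classes: `Manifest` and `plan_files` are hypothetical names, and the partition summary is reduced to a single min/max pair of ISO date strings.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Manifest:
    path: str
    # Partition summary: lowest and highest partition value among the
    # data files listed in this manifest (ISO dates sort lexicographically).
    lower: str
    upper: str
    data_files: List[str]


def plan_files(manifest_list: List[Manifest], day_from: str, day_to: str) -> List[str]:
    """Return data files from manifests whose range overlaps the filter."""
    selected: List[str] = []
    for m in manifest_list:
        if m.upper < day_from or m.lower > day_to:
            continue  # whole manifest skipped without opening it
        selected.extend(m.data_files)
    return selected


manifests = [
    Manifest("m1.avro", "2024-01-01", "2024-01-31", ["jan-a.parquet", "jan-b.parquet"]),
    Manifest("m2.avro", "2024-02-01", "2024-02-29", ["feb-a.parquet"]),
    Manifest("m3.avro", "2024-03-01", "2024-03-31", ["mar-a.parquet"]),
]
print(plan_files(manifests, "2024-02-10", "2024-02-20"))
```

A real planner goes one level further and filters individual files inside the surviving manifests using per-file statistics; the sketch stops at the manifest level to keep the point visible.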

Delta Lake, on the other hand, uses a transaction log with periodic checkpoints. When maintained properly, it performs well, but planning might slow down for very large tables if checkpointing and optimize/vacuum routines aren't regularly performed. Maintaining a healthy checkpoint cadence is key to ensuring consistent performance at scale.
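Why checkpoint cadence matters is easier to see with a toy log replay. The model below is an illustration only: the real Delta log stores JSON commit files and Parquet checkpoints with richer actions, and `replay` is a made-up function name.

```python
from typing import Dict, List, Set, Tuple

Action = Tuple[str, str]  # ("add" | "remove", file_path)


def replay(checkpoint_version: int, checkpoint_files: Set[str],
           commits: Dict[int, List[Action]], target_version: int) -> Set[str]:
    """Rebuild the set of active files at `target_version`.

    Starts from the checkpoint state and applies every later commit in order.
    """
    active = set(checkpoint_files)
    for version in range(checkpoint_version + 1, target_version + 1):
        for op, path in commits[version]:
            if op == "add":
                active.add(path)
            elif op == "remove":
                active.discard(path)
    return active


# Hypothetical table: checkpoint at version 10, two commits after it.
commits = {
    11: [("add", "c.parquet")],
    12: [("remove", "a.parquet"), ("add", "d.parquet")],
}
state = replay(10, {"a.parquet", "b.parquet"}, commits, target_version=12)
print(sorted(state))
```

The number of commits a reader has to replay is the distance from the last checkpoint to the target version, which is why stale checkpoints translate directly into slower planning.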

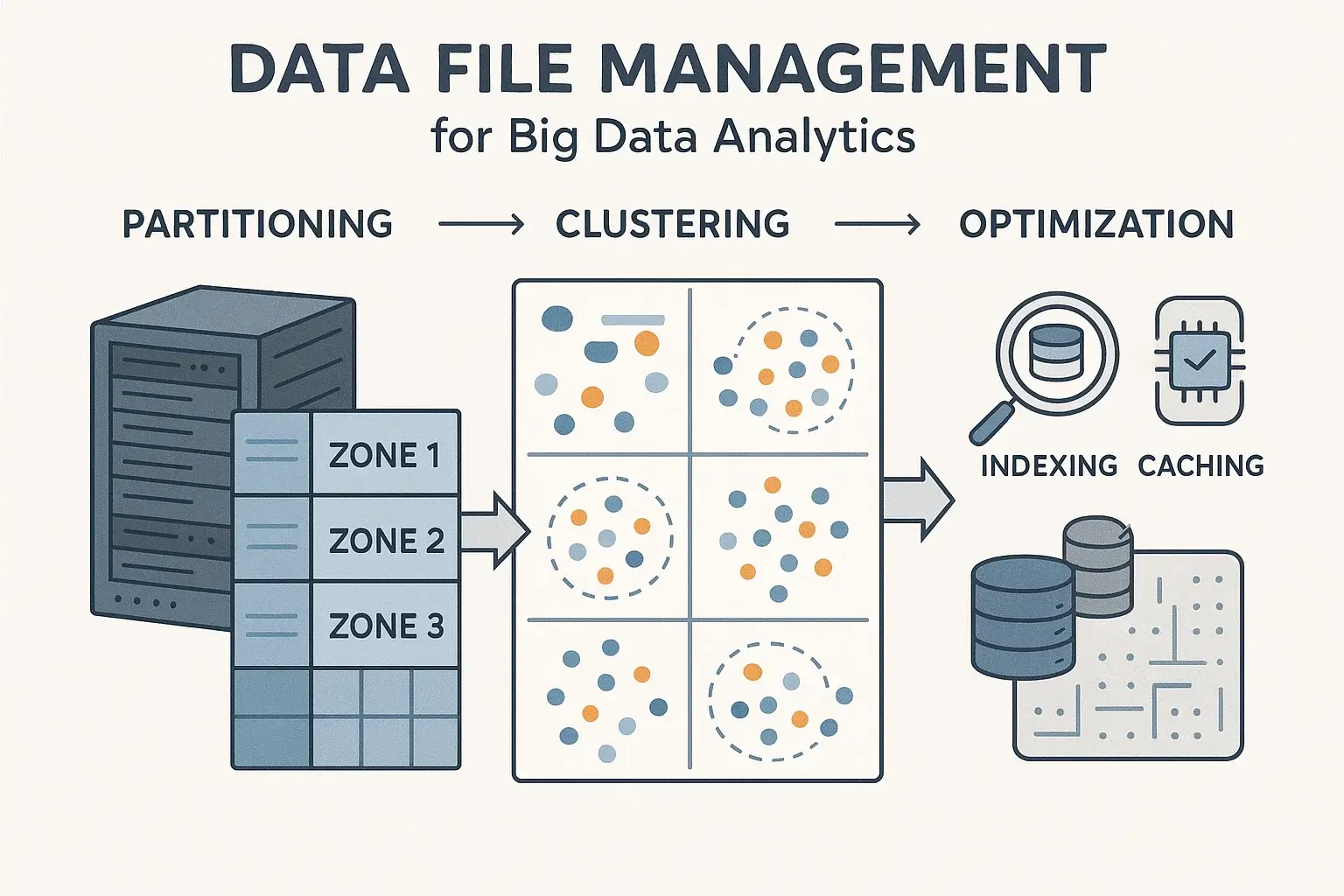

Partitioning, Clustering & Data Skipping

Partitioning plays a key role in Delta Lake performance. Recently, Delta has introduced Liquid Clustering as a smarter alternative to static partitions and Z-ORDER. It allows redefining clustering keys without rewriting existing data, a big win, and it has shown strong performance gains in real-world use cases.

That said, Iceberg supports clustering too, but with more flexibility. It offers partition transforms and true partition evolution, so you can modify partition strategies over time without rewriting historical data. Additionally, Iceberg supports Z-ordering and file sort optimization via the rewrite_data_files action.
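Partition transforms derive the partition value from a regular column, so queries filter on the column itself and never on a separate partition field. Here is a minimal sketch of two of them, `day` and `truncate`, written from the behavior described in the Iceberg spec. The function names are my own, and timestamps are assumed to be timezone-naive.

```python
from datetime import date, datetime

EPOCH = date(1970, 1, 1)


def day_transform(ts: datetime) -> int:
    """Days since 1970-01-01, mirroring the `day` transform."""
    return (ts.date() - EPOCH).days


def truncate_transform(value: int, width: int) -> int:
    """Round an integer down to a multiple of `width` (`truncate[W]`)."""
    # Python's % is non-negative for a positive width, so negative
    # values round toward negative infinity.
    return value - (value % width)


print(day_transform(datetime(2024, 1, 1, 13, 45)))
print(truncate_transform(17, 10), truncate_transform(-1, 10))
```

Because the transform is table metadata rather than a physical column, the partition spec can later change (say, from daily to hourly) for new data while old files keep the spec they were written with.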

TAKEAWAY?

With proper layout, both formats can saturate compute for large scan‑heavy analytics. Delta often excels in update‑heavy streaming/near‑real‑time scenarios (COW + DVs), while Iceberg shines at massive partition/file counts thanks to its planning model and partition evolution.

Ecosystem Integration: Where the Real Differences Show

This is probably the most important section for your decision-making, especially given how much feature support varies from one engine to the next.

Multi-Engine Support & Performance

Iceberg's multi-engine integration is an industry benchmark - no other format matches its breadth and maturity:

- Spark & Flink: Native readers and writers deliver full support for streaming and batch, time travel, partition evolution, and schema flexibility.

- Trino/Presto: Iceberg is tightly integrated and widely used for federated ad-hoc analytics, supporting advanced features out-of-the-box.

- ClickHouse & Doris: Production-grade readers let you tap into Iceberg tables for ultra-fast OLAP and analytics, with support for key features like partition pruning and time travel.

- Hive/Impala, Dremio, DuckDB, StarRocks, BigQuery, Snowflake, Redshift: Extensive catalog and metadata support enables you to query and govern your Iceberg tables across almost any environment, cloud, or workflow.

Delta Lake works with Spark (where it excels), Flink (maturing), and Trino (mature), and is read-only in Presto, Hive, BigQuery, Athena, and others. It has added features such as Delta Standalone Reader and Delta UniForm to enhance cross-platform access. However, advanced features and the smoothest experience (e.g., Deletion Vectors and enterprise-level governance) are generally still found within Spark and Databricks environments.

In short, its cross-engine support isn't as universally mature or engine-neutral as Iceberg's.

Cloud Services Integration

AWS: Athena natively reads, writes, and optimizes Iceberg tables. Amazon S3 Tables offer fully managed Iceberg with automatic optimization, maintenance, and REST Catalog APIs for wide tool integration.

Azure: Synapse Analytics and Microsoft Fabric recently added support for Iceberg. Azure Data Factory can write in Iceberg format to Data Lake Storage Gen2. Azure Databricks supports managed Iceberg and hybrid scenarios with UniForm (currently at an early stage). Delta support is mature and works out of the box.

Snowflake: Provides managed Iceberg tables with choice of internal or external catalogs. Lifecycle maintenance is automated with Snowflake-managed mode, while external catalogs allow custom integration.

Google Cloud: BigQuery BigLake delivers managed Iceberg tables, with open-query-engine access and full support for mutations, schema evolution, and streaming. BigQuery can also read external Iceberg tables.

Databricks: Offers managed Delta and Iceberg tables across all clouds, and is strongest on the Databricks platform. Iceberg support is still nascent: append-only and limited to Databricks-managed tables.

Check out the query-engine support matrix (here).

Catalogs & Governance

Catalogs are the brain of a lakehouse (metadata management plus ACID commits), and their ecosystem is evolving fast. Apache Polaris (incubating) now unifies Iceberg and Delta Lake tables in one open-source catalog, delivering vendor-neutral management and robust RBAC governance across major query engines.

REST-based options like Polaris, Gravitino, Lakekeeper, and Nessie make Iceberg highly flexible: you can connect multiple warehouses and tools while maintaining a single table format. If vendor neutrality matters to you, that keeps multi-tool architectures easy and future-proof, because you avoid being locked into a single vendor and keep cost, tooling, and performance in your own hands.
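For a feel of what "connecting an engine to a REST catalog" involves on the Spark side, the sketch below assembles the usual Iceberg catalog properties. The property keys follow Iceberg's Spark configuration; the catalog name, URI, and warehouse value are placeholders, and the helper function itself is hypothetical. What the warehouse value should be depends on the catalog server you run.

```python
from typing import Dict


def iceberg_rest_catalog_conf(name: str, uri: str, warehouse: str) -> Dict[str, str]:
    """Spark properties for an Iceberg catalog backed by a REST server."""
    prefix = f"spark.sql.catalog.{name}"
    return {
        "spark.sql.extensions":
            "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions",
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        f"{prefix}.type": "rest",
        f"{prefix}.uri": uri,
        f"{prefix}.warehouse": warehouse,
    }


# Placeholder endpoint and bucket; replace with your own.
conf = iceberg_rest_catalog_conf(
    "lake", "http://localhost:8181", "s3://example-bucket/warehouse")
for key, value in conf.items():
    print(f"{key}={value}")
```

These pairs would typically be passed to `SparkSession.builder.config(key, value)` or as `--conf` flags, with the Iceberg Spark runtime jar on the classpath. Other engines point at the same REST endpoint through their own connector settings, which is where the single-format, many-tools setup comes from.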

Databricks recently open-sourced Unity Catalog and its APIs, a major move that lets you manage Delta (and, increasingly, Iceberg) tables beyond the Databricks platform. It's a step toward making Delta less proprietary and more accessible. However, many top optimizations and enterprise features are still exclusive to the managed Databricks offering, and current Unity Catalog support for Iceberg remains somewhat limited (e.g., append-only operations).

Bottom line: For organizations wanting maximum optionality, Iceberg's open REST catalog ecosystem stands out for governance and seamless integration across any engine. Delta Lake and Unity Catalog deliver the strongest, most turnkey experience within Databricks, but outside the platform, cross-format features and true parity are still catching up.

Enterprise Features: The Compliance & Security Story

Both formats handle the enterprise requirements well, but with different approaches:

- ACID & Time Travel: Both provide snapshot isolation and "as of" queries for audits and reproducibility. This is crucial when you need to prove your ML model was trained on specific data for regulatory compliance.

- Deletes/Updates for GDPR/CCPA: Delta uses COW + Deletion Vectors, while Iceberg provides equality/position deletes and v3 Deletion Vectors. Both can handle right-to-be-forgotten requests, though the implementation differs.

- Schema Evolution: Iceberg's stable column IDs enable safe rename/reorder/type-widening operations. Delta supports metadata-only renames and drops via column mapping.
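The reason stable column IDs make renames safe can be shown with a toy reader. This is a conceptual model, not either format's real reader: data rows are keyed by field ID, and the schema is just an ID-to-name mapping. All names and values are invented.

```python
from typing import Dict, List, Optional

# A schema maps a stable field id to the column's current name.
Schema = Dict[int, str]

# Data files store values keyed by field id, not by name.
data_file: List[Dict[int, object]] = [
    {1: 101, 2: "a@example.com"},
    {1: 102, 2: "b@example.com"},
]


def read(rows: List[Dict[int, object]], schema: Schema) -> List[Dict[str, Optional[object]]]:
    """Project stored rows through the current id -> name mapping."""
    return [{name: row.get(fid) for fid, name in schema.items()} for row in rows]


schema_v1: Schema = {1: "user_id", 2: "email"}
# Rename: same id, new name. No data file is touched.
schema_v2: Schema = {1: "user_id", 2: "contact_email"}
# Add a column: new id. Older files simply have no value for it.
schema_v3: Schema = {**schema_v2, 3: "country"}

print(read(data_file, schema_v2)[0])
print(read(data_file, schema_v3)[0])
```

Because lookups go through the ID, a renamed column still resolves to the old data and a dropped-then-re-added name cannot accidentally resurrect stale values. Delta's column mapping serves a similar purpose for its metadata-only renames and drops.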

Real-World Cost Impact: The Numbers Don't Lie

Here's where things get really interesting from a business perspective. DoorDash's migration to Iceberg provides some compelling real-world data:

- 25-49% storage cost reduction compared to their original Snowflake architecture using just default ZSTD compression (ref)

- 40-70% compute cost reduction compared to a fully Snowflake-based setup, thanks to better resource utilization and to collapsing two raw data layers (Flink raw and Snowflake Snowpipe raw) into one.

- Millions of dollars saved from migrating their highest volume events to Iceberg format pipelines.

The cost savings were so significant that they could completely remove expensive Snowflake-native storage and ingestion costs while maintaining query capabilities through External Iceberg tables in Snowflake.

The Bottom Line: When to Choose What

Go with Apache Iceberg if you want:

- Maximum vendor neutrality and flexibility to use Spark, Flink, Trino, BigQuery, Snowflake, or Athena over a single table format.

- Cost savings on compute and storage (e.g., DoorDash reported 25-49% lower storage costs and 40-70% lower compute costs after moving from Snowflake-native storage to Iceberg).

- Efficient handling of large datasets with many partitions or evolving access patterns.

- Fine-grained deletes at scale and robust, open governance options.

Go with Delta Lake if you want:

- Turnkey automation for Spark-centric, update-heavy pipelines.

- Deepest integration and fully managed enterprise governance within the Databricks ecosystem.

- The most advanced support for streaming workloads and real-time analytics.

Operational Reality Check

Setup Complexity:

Iceberg: Use Hive Metastore, AWS Glue, or open REST catalogs like Polaris and Lakekeeper for multi-engine coordination. Engines connect through standard connectors and work with tables via standard SQL, which keeps integration simple. To get started, pick an ingestion tool (OLake, Debezium-Kafka, or Flink), a table store and catalog (AWS S3 Tables, or Polaris/Lakekeeper), and any query engine or warehouse (Athena, Trino, BigQuery, Snowflake, or even Databricks-Spark).

Delta: Basic tables can be used path-based right away with Spark. For enterprise features and cross-engine governance, tables are registered in a metastore or catalog such as Unity Catalog, which enables enhanced management and security within supported platforms. Databricks gives you end-to-end managed Delta tables.

Table Maintenance:

If you don't plan to use a managed offering like S3 Tables or Databricks:

Iceberg: Schedule expire_snapshots plus the data-file and delete-file rewrite (compaction) jobs yourself, tuned to how often your data lands and how your workloads hit those tables.

Delta: Schedule OPTIMIZE (compaction) and VACUUM (file Garbage Collection) based on your workload patterns; maintain checkpoints.
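As a starting point for a scheduled job, the sketch below only builds the SQL statements; it does not run them. The Iceberg calls use the Spark stored procedures `expire_snapshots` and `rewrite_data_files`, and the Delta statements use `OPTIMIZE` and `VACUUM ... RETAIN n HOURS`. The catalog name, table names, and retention values are placeholders, and both helper functions are hypothetical.

```python
from typing import List


def iceberg_maintenance(catalog: str, table: str,
                        older_than: str, retain_last: int) -> List[str]:
    """Statements to expire old snapshots, then compact small data files."""
    return [
        f"CALL {catalog}.system.expire_snapshots("
        f"table => '{table}', older_than => TIMESTAMP '{older_than}', "
        f"retain_last => {retain_last})",
        f"CALL {catalog}.system.rewrite_data_files(table => '{table}')",
    ]


def delta_maintenance(table: str, retain_hours: int) -> List[str]:
    """Statements to compact files, then garbage-collect unreferenced ones."""
    return [
        f"OPTIMIZE {table}",
        f"VACUUM {table} RETAIN {retain_hours} HOURS",
    ]


# Placeholder names and retention settings.
for stmt in iceberg_maintenance("lake", "ml.user_features",
                                "2024-06-01 00:00:00", retain_last=10):
    print(stmt)
for stmt in delta_maintenance("ml.user_features_delta", retain_hours=168):
    print(stmt)
# In a real job each statement would be passed to spark.sql(stmt).
```

Retention settings interact with time travel: any snapshot or version removed by these jobs can no longer be queried, so keep the window at least as long as your reproducibility and audit requirements.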

Managed Options:

Iceberg: Amazon S3 Tables offer fully managed Iceberg tables with built-in background maintenance (compaction, optimization), REST-catalog APIs, and seamless multi-engine access (Athena, EMR, Trino, and more). Dremio is also one of the fully managed platforms and ryft.io supports Iceberg compaction and snapshot lifecycle management. Google Cloud now provides managed Iceberg tables in BigQuery BigLake, and Snowflake also supports managed Iceberg; however, both impose notable limitations on external querying/access.

Delta: Databricks provides fully managed Delta tables with deep integration across its own Spark/Photon compute engine and the Databricks ecosystem; cross-engine access is possible via newer standards, but the richest features and optimizations remain on Databricks itself.
