S3 Parquet

OLake supports writing data in Parquet format directly to S3. Before proceeding with the S3 Parquet destination, we recommend reviewing the Getting Started and Installation sections.

Prerequisites

- Before setting up the destination, make sure you have successfully set up the source.

- Review the minimum required IAM Permissions for S3 Parquet Writer.

Configuration

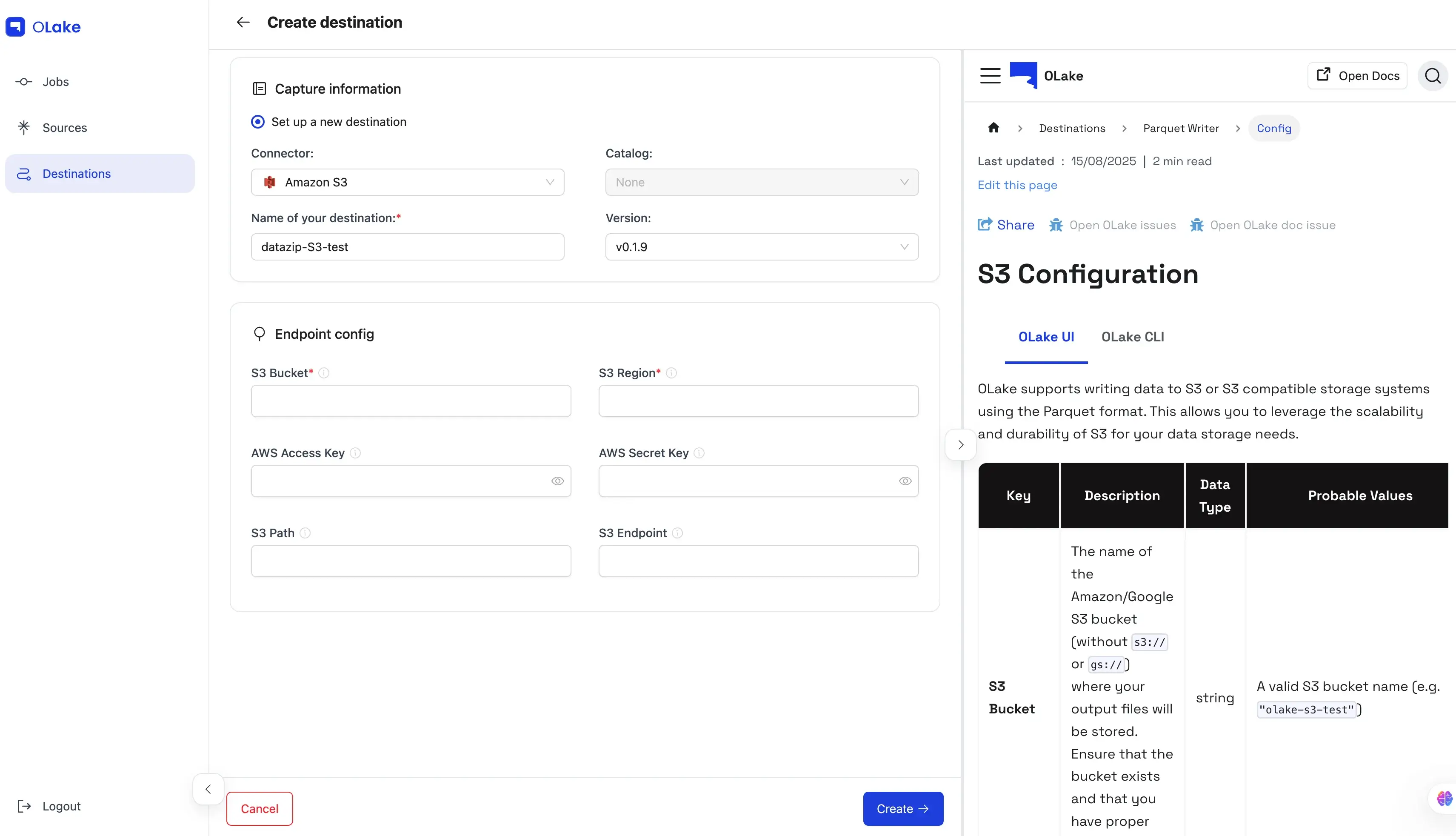

- OLake UI

- OLake CLI

| Key | Description | Data Type | Probable Values |

|---|---|---|---|

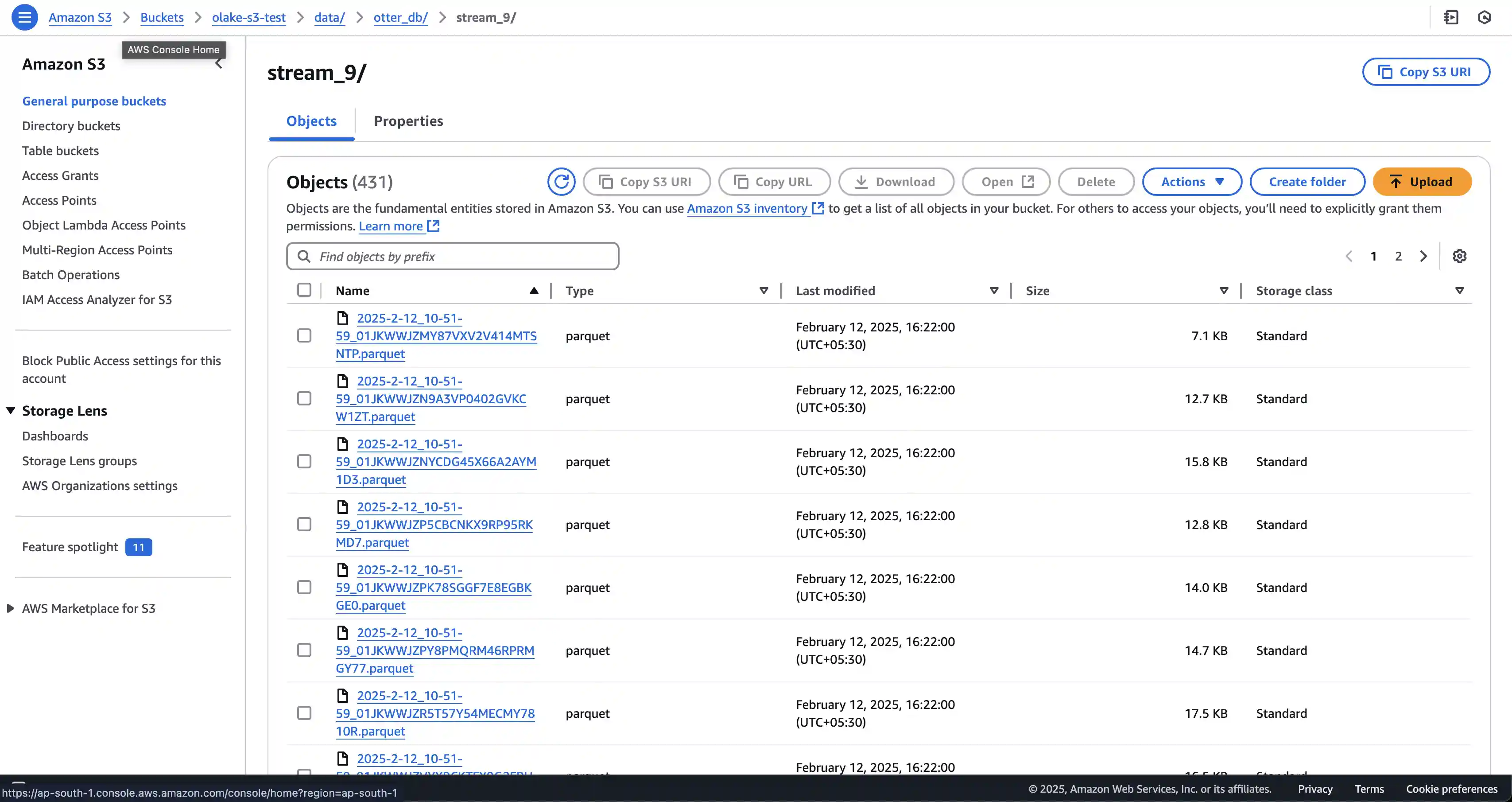

| S3 Bucket | The name of the Amazon/Google S3 bucket (without s3:// or gs://) where your output files will be stored. Ensure that the bucket exists and that you have proper access. | string | A valid S3 bucket name (e.g. "olake-s3-test") |

| S3 Region | The AWS/MinIO/GCS region where the specified S3 bucket is hosted. | string | AWS/GCS region codes such as "ap-south-1", "us-west-2", etc. |

| S3 Access Key | The AWS/MinIO/GCS HMAC access key used for authenticating S3 requests. | string | A valid AWS/GCS HMAC access key |

| S3 Secret Key | The AWS/MinIO/GCS HMAC secret key used for S3 authentication. This key should be kept secure. | string | A valid AWS/GCS HMAC secret key |

| S3 Path | The specific path (or prefix) within the S3 bucket where data files will be written. This is typically a folder path that starts with a / (e.g. "/data"). | string | A valid path string |

| S3 Endpoint | (Optional) Custom S3-compatible endpoint. Required when using GCS HMAC keys or MinIO S3. | string | "https://storage.googleapis.com", "https://<MinIO-Storage-Endpoint>:9000" |
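The bucket and path conventions in the table (no `s3://` or `gs://` prefix on the bucket, a path that starts with `/`) can be sketched in a few lines of Python. This is only an illustration and not part of OLake: `normalize_bucket` and `normalize_path` are hypothetical helper names, and whether OLake itself tolerates a scheme prefix or a missing leading slash is not stated here.

```python
def normalize_bucket(value: str) -> str:
    """Strip an accidental s3:// or gs:// scheme and any surrounding slashes."""
    for scheme in ("s3://", "gs://"):
        if value.startswith(scheme):
            value = value[len(scheme):]
    return value.strip("/")


def normalize_path(value: str) -> str:
    """Return the prefix with exactly one leading slash and no trailing slash."""
    return "/" + value.strip("/")


print(normalize_bucket("s3://olake-s3-test"))  # olake-s3-test
print(normalize_path("data/"))                 # /data
```

Running values through helpers like these before pasting them into the form avoids the most common formatting mistakes for these two fields.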

Create an S3-compatible destination in OLake UI

Steps to get started:

- Navigate to the Destinations tab.

- Click on + Create Destination.

- Select AWS S3 as the Destination type from the Connector drop-down.

- Fill in the required connection details in the form.

- Click on Create ->.

- OLake will test the destination connection and display the results. If the connection is successful, you will see a success message. If there are any issues, OLake will provide error messages to help you troubleshoot.

This creates an S3 destination in OLake. You can now use this destination in your Jobs Pipeline to sync data from any Source to AWS S3.

Using AWS Amazon S3 Credentials

OLake supports direct syncing of data from source to AWS S3 using Amazon S3 credentials.

For this, refer to the IAM permissions needed for AWS S3.

You need to provide:

- AWS S3 bucket path

- AWS S3 access key and secret key

- AWS S3 region

- AWS S3 path (if any)

- AWS S3 endpoint (required only for S3-compatible storage systems other than AWS S3; refer to the S3 Endpoint Doc)

If using AWS IAM Role with the required permissions, the AWS Access Key and Secret Key fields can be left blank.
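As a rough sketch of how the inputs above fit together, the following Python builds a destination configuration and leaves out the credentials when an IAM role is used. The key names match the CLI configuration keys documented for this destination; `build_destination` is a hypothetical helper, and treating "left blank" as "omit the key" is an assumption rather than documented OLake behavior.

```python
import json
from typing import Optional


def build_destination(bucket: str, region: str, path: Optional[str] = None,
                      access_key: Optional[str] = None,
                      secret_key: Optional[str] = None,
                      endpoint: Optional[str] = None) -> dict:
    """Assemble a destination config; credentials are optional (IAM role case)."""
    # The access key and secret key only make sense as a pair.
    if bool(access_key) != bool(secret_key):
        raise ValueError("provide both access key and secret key, or neither")
    writer = {"s3_bucket": bucket, "s3_region": region}
    if access_key:
        writer["s3_access_key"] = access_key
        writer["s3_secret_key"] = secret_key
    if path:
        writer["s3_path"] = path
    if endpoint:  # only for S3-compatible storage other than AWS S3
        writer["s3_endpoint"] = endpoint
    return {"type": "PARQUET", "writer": writer}


# IAM role case: no keys are passed, so none appear in the output.
print(json.dumps(build_destination("olake-s3-test", "ap-south-1", path="/data"), indent=2))
```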

Using GCS-compatible S3 Credentials

OLake supports writing data to Google Cloud Storage (GCS) in parquet format.

Google Cloud Storage provides an S3-compatible interface, allowing you to use S3-compatible tools and libraries to interact with GCS buckets and objects. This interoperability enables seamless integration and ingestion of data into GCS by supporting the Amazon S3 API, which means you can use existing S3 tools and workflows with minimal changes such as updating the endpoint to https://storage.googleapis.com and authenticating via HMAC keys (discussed in next section). This compatibility simplifies migrations, data transfers, and tool usage across platforms.

For role-based permissions, refer to GCP IAM Permission.

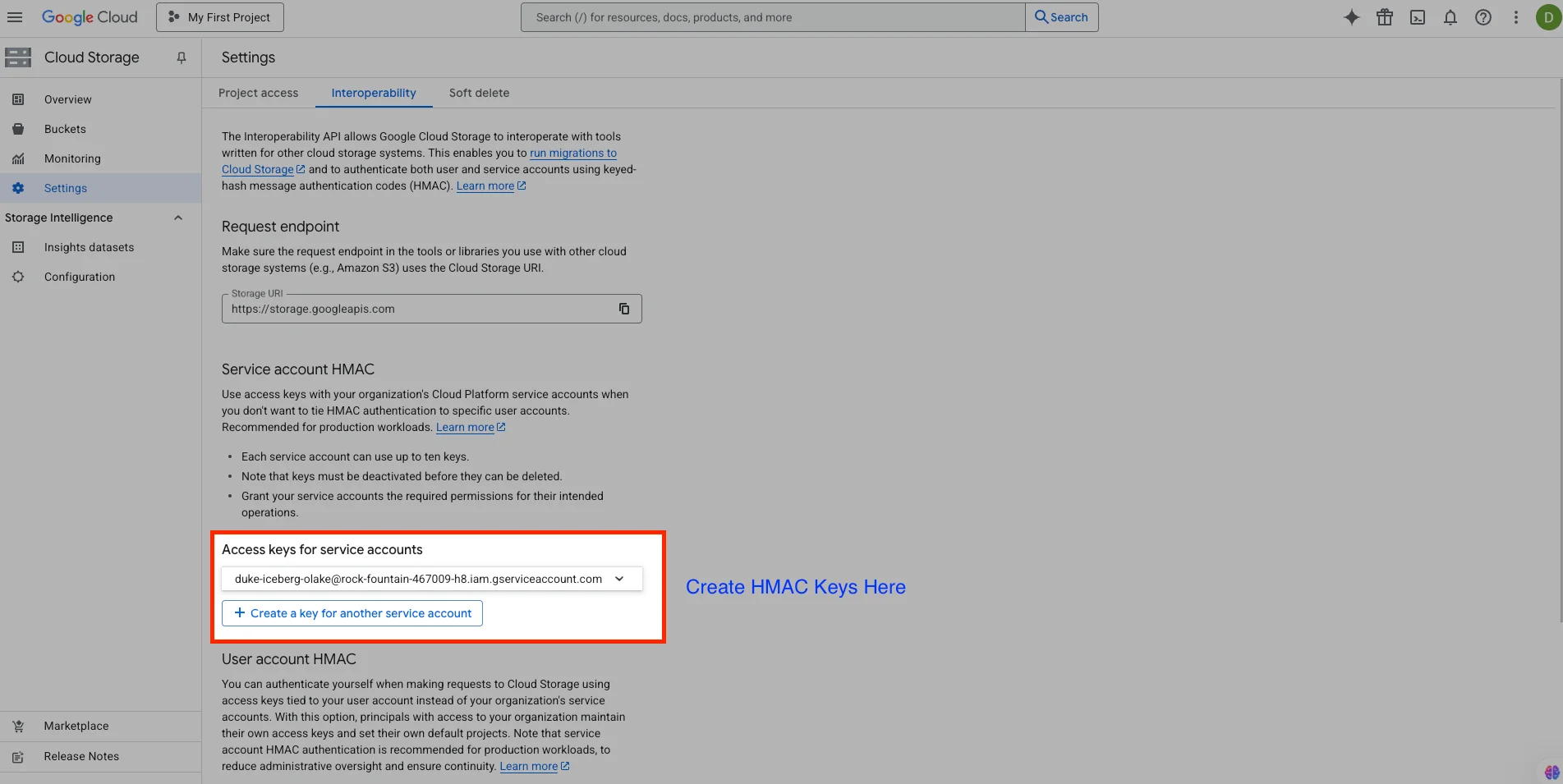

Creation of HMAC Keys

- HMAC keys will act as the access key and secret key for S3 writer.

- In the Google Cloud Console, go to Storage, then Settings, and select Interoperability.

- Copy the request endpoint and provide it as the S3 endpoint in OLake.

- Create HMAC keys for the service account, which will have an access key and corresponding secret key.

- Use those HMAC keys as S3 access key and secret key.

HMAC (Hash-based Message Authentication Code) keys in Google Cloud Storage are used for authentication when accessing GCS resources, particularly through the S3-compatible API. They consist of an access key and a secret key, which provide a way to sign requests and verify identity without using Google account credentials.

Reference: https://cloud.google.com/storage/docs/authentication/hmackeys
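To make "signing a request" concrete, here is a minimal sketch of the underlying HMAC primitive using Python's standard `hmac` module. It is deliberately simplified: the S3-compatible API uses a multi-step signing scheme (AWS Signature Version 4) built on top of HMAC-SHA256, and the secret and request string below are placeholders, not real values or a real canonical request.

```python
import hashlib
import hmac


def sign(secret_key: str, message: str) -> str:
    """Return the hex HMAC-SHA256 of message under secret_key."""
    return hmac.new(secret_key.encode(), message.encode(), hashlib.sha256).hexdigest()


def verify(secret_key: str, message: str, signature: str) -> bool:
    """Recompute the signature and compare it in constant time."""
    return hmac.compare_digest(sign(secret_key, message), signature)


secret = "example-secret"                          # placeholder, not a real key
request = "PUT /olake-s3-test/data/file.parquet"   # simplified stand-in for a request
signature = sign(secret, request)

print(verify(secret, request, signature))          # True
print(verify("wrong-secret", request, signature))  # False
```

The secret key never travels with the request; only the signature does, which is why the server can verify identity without seeing account credentials.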

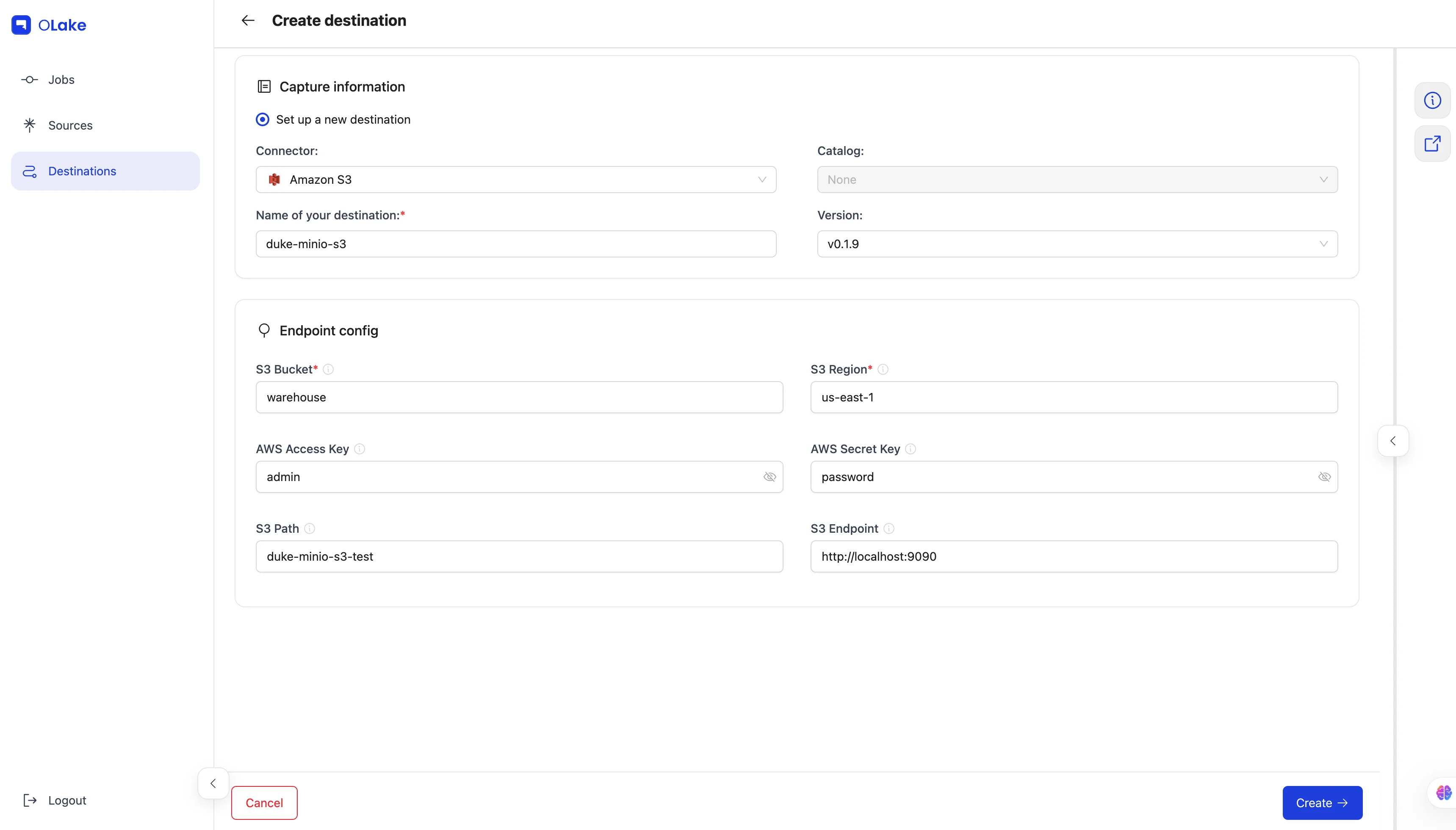

Using Minio S3 Credentials

OLake also supports S3-compatible MinIO in its S3 destination configuration.

Create a MinIO service account, create a bucket in it, and provide the MinIO access key and secret key along with the bucket URL in the config.

In the S3 endpoint field, provide the URL through which the MinIO bucket is accessible.

The MinIO destination configuration was tested by spinning up the MinIO Docker container in the same directory where OLake's UI backend is present. The OLake UI Docker container was also running.

| Key | Description | Data Type | Probable Values |
|---|---|---|---|
| type | Specifies the output file format for writing data. Currently, only the "PARQUET" format is supported. | string | "PARQUET" |
| s3_bucket | The name of the Amazon S3/GCS bucket (without s3:// or gs://) where your output files will be stored. Ensure that the bucket exists and that you have proper access. | string | A valid S3 bucket name (e.g. "olake-s3-test") |
| s3_region | The AWS/GCS region where the specified S3 bucket is hosted. | string | AWS/GCS region codes such as "us-west-2", "ap-south-1", etc. |
| s3_access_key | The AWS/GCS HMAC access key used for authenticating S3 requests. | string | A valid AWS/GCS HMAC access key |
| s3_secret_key | The AWS/GCS HMAC secret key used for S3 authentication. This key should be kept secure. | string | A valid AWS/GCS HMAC secret key |
| s3_endpoint | (Optional) The custom endpoint for S3-compatible services. Required for GCS using HMAC keys. | string | "https://storage.googleapis.com" |
| s3_path | (Optional) The specific path (or prefix) within the S3 bucket where data files will be written. This is typically a folder path that starts with a / (e.g. "/data"). | string | A valid path string |
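The table can be turned into a small pre-flight check. The sketch below is not OLake's own validation: `find_problems` is a hypothetical helper, the nesting of the `s3_*` keys under `writer` follows the sample configurations on this page, and treating only `s3_bucket` and `s3_region` as always required is an assumption based on the optional and IAM-role notes.

```python
from typing import Any, Dict, List

STRING_KEYS = ("s3_bucket", "s3_region", "s3_access_key",
               "s3_secret_key", "s3_endpoint", "s3_path")
REQUIRED_KEYS = ("s3_bucket", "s3_region")  # assumption, see the lead-in


def find_problems(config: Dict[str, Any]) -> List[str]:
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    if config.get("type") != "PARQUET":
        problems.append('type must be "PARQUET"')
    writer = config.get("writer")
    if not isinstance(writer, dict):
        return problems + ["writer must be an object"]
    for key in REQUIRED_KEYS:
        if not writer.get(key):
            problems.append(f"writer.{key} is required")
    for key in STRING_KEYS:
        if key in writer and not isinstance(writer[key], str):
            problems.append(f"writer.{key} must be a string")
    return problems


sample = {"type": "PARQUET",
          "writer": {"s3_bucket": "olake-s3-test", "s3_region": "ap-south-1"}}
print(find_problems(sample))  # []
```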

Create an S3-compatible destination in OLake CLI

To enable S3 or S3-compatible writes, you must create a destination.json file with the configuration parameters listed below. The sample configuration provided here outlines the necessary keys and their expected values.

- Depending on the source (Postgres, MongoDB, MySQL, Oracle) and the storage you want to sync the data to, choose one of the S3 destination configurations below.

Using AWS Amazon S3 Credentials

For more information on the keys and values of the S3 config, refer to the S3 Config Info.

    {
      "type": "PARQUET",
      "writer": {
        "s3_bucket": "olake-s3-test",
        "s3_region": "ap-south-1",
        "s3_access_key": "xxxxxxxxxxxxxxxxxxxx",
        "s3_secret_key": "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx",
        "s3_path": "/data"
      }
    }

Using GCS-compatible S3 Credentials

For this setup, create HMAC keys through a GCS service account and set s3_endpoint to "https://storage.googleapis.com".

For more information on creating HMAC keys, refer to GCP HMAC Keys.

    {
      "type": "PARQUET",
      "writer": {
        "s3_bucket": "olake-s3-test",
        "s3_region": "ap-south-1",
        "s3_access_key": "xxxxxxxxxxxxxxxxxxxx",
        "s3_secret_key": "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx",
        "s3_endpoint": "https://storage.googleapis.com",
        "s3_path": "/data"
      }
    }

Using Minio S3 Credentials

The MinIO destination configuration was tested by spinning up the MinIO Docker container in the same directory where OLake's CLI backend is present.

Change the s3_endpoint in the config below to your actual MinIO storage URL.

    {
      "type": "PARQUET",
      "writer": {
        "s3_bucket": "warehouse",
        "s3_region": "us-east-1",
        "s3_access_key": "xxxxxxxxxxxxxxxxxxxx",
        "s3_secret_key": "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx",
        "s3_endpoint": "http://<MinIO-Storage-Endpoint>:9000"
      }
    }
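Since the endpoint has to be replaced with a real URL, a quick sanity check of its shape can catch a missing scheme or port before running a sync. The following is a minimal sketch using only the Python standard library; `parse_endpoint` is a hypothetical helper and `localhost` stands in for whatever host your MinIO instance actually runs on.

```python
from typing import Tuple
from urllib.parse import urlsplit


def parse_endpoint(endpoint: str) -> Tuple[str, str, int]:
    """Split an endpoint URL into (scheme, host, port), raising on obvious mistakes."""
    parts = urlsplit(endpoint)
    if parts.scheme not in ("http", "https"):
        raise ValueError(f"endpoint must start with http:// or https://: {endpoint!r}")
    if not parts.hostname:
        raise ValueError(f"endpoint has no host: {endpoint!r}")
    # Fall back to the scheme's default port when none is given.
    port = parts.port or (443 if parts.scheme == "https" else 80)
    return parts.scheme, parts.hostname, port


print(parse_endpoint("http://localhost:9000"))  # ('http', 'localhost', 9000)
```

This only checks the URL's form. It does not confirm that a MinIO server is reachable at that address.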

Using Local

- Using Docker

  Note: If using Docker, the mounted path has to be used.

  "local_path": "/mnt/config", where "/mnt/config" is the local directory in the Docker container where Parquet files will be stored. This path is mapped to your host file system via a Docker volume.

  destination.json

      {
        "type": "PARQUET",
        "writer": {
          "local_path": "/mnt/config"
        }
      }

- Using Local system

  destination.json

      {
        "type": "PARQUET",
        "writer": {
          "local_path": "./mnt/config"
        }
      }

| Key | Data Type | Example Value | Description & Possible Values |
|---|---|---|---|
| type | string | "PARQUET" | Specifies the output file format. Currently, only the Parquet format is supported. |
| writer.local_path | string | "./mnt/config" | The directory on the user's local machine where Parquet files will be stored. |

Note: This configuration enables the Parquet local writer. For more details, check out the README section.
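The Docker case can be confusing because the path in destination.json is the container path, while the files end up on the host. The sketch below translates between the two for a bind mount. It assumes a volume mapping such as `-v /home/me/olake:/mnt/config`; the host directory, the file name, and the `host_path_for` helper are all hypothetical.

```python
import posixpath


def host_path_for(container_file: str, host_dir: str, container_dir: str) -> str:
    """Translate a path inside the container to its location on the host."""
    rel = posixpath.relpath(container_file, container_dir)
    if rel == ".." or rel.startswith("../"):
        raise ValueError(f"{container_file} is not under {container_dir}")
    return posixpath.join(host_dir, rel)


# A file written under local_path "/mnt/config" inside the container...
print(host_path_for("/mnt/config/users/part-0.parquet",
                    "/home/me/olake", "/mnt/config"))
# ...shows up on the host at /home/me/olake/users/part-0.parquet
```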

- The generated .parquet files use SNAPPY compression (Read more). Note that SNAPPY is no longer supported by S3 Select when performing queries.

- OLake creates a test folder named olake_writer_test containing a single text file (.txt) with the content "S3 write test". This is used to verify that you have the necessary permissions to write to S3.
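The idea behind the write test can be illustrated locally. The sketch below mimics it against a temporary directory instead of S3: it creates the `olake_writer_test` folder and writes a text file with the same content. It is an analogy only. The file name `test.txt` is hypothetical, and OLake's actual check runs against the configured bucket.

```python
import os
import tempfile


def write_test(root: str) -> str:
    """Create olake_writer_test/<file>.txt under root and return the file path."""
    folder = os.path.join(root, "olake_writer_test")
    os.makedirs(folder, exist_ok=True)
    target = os.path.join(folder, "test.txt")  # hypothetical file name
    with open(target, "w", encoding="utf-8") as fh:
        fh.write("S3 write test")
    return target


with tempfile.TemporaryDirectory() as root:
    path = write_test(root)
    with open(path, encoding="utf-8") as fh:
        print(fh.read())  # S3 write test
```

If the write raises a permission error, the destination is not writable, which is the same signal the real test gives for the bucket.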